Digital life is advancing rapidly, and AI is now used by nearly everyone, adults and children alike. Whether for students’ studies or creators’ video and photo editing, AI has become part of our daily lives. However, it has disadvantages as well as advantages. Because of AI, people often cannot distinguish real content from fake, and it has become difficult to tell whether a photo was edited or generated by AI. With this threat in mind, platforms like YouTube, Facebook, Instagram, and Threads have introduced a new feature that shows a label on photos and videos created or edited with AI, so people know what they are looking at.

These changes are not arbitrary. According to the Indian government, any AI-created content on social media will have to state that it was created by AI. For this reason, these platforms are introducing tools that let people identify AI content. This change will affect everyone, creators and audiences alike, and will noticeably change how social media is used.

Why These Labels Were Needed

The use of AI has grown significantly in recent times. There are now AI tools that can generate a realistic face, copy someone’s voice, and edit real videos so convincingly that people can’t tell whether they’re real or fake. This leaves many people feeling distressed and confused.

Social media platforms have realized that people are increasingly upset by undisclosed AI content. Creators can use AI tools to enhance their work, but they should also disclose which content is AI-generated and which is not. For this reason, major companies like Meta and YouTube have started labeling AI content.

These labels aim to achieve three big goals:

- Protect people from deception.

- Prevent the spread of fake information and deepfakes.

- Maintain trust in digital media during crucial times like elections.

How These New AI Labels Work

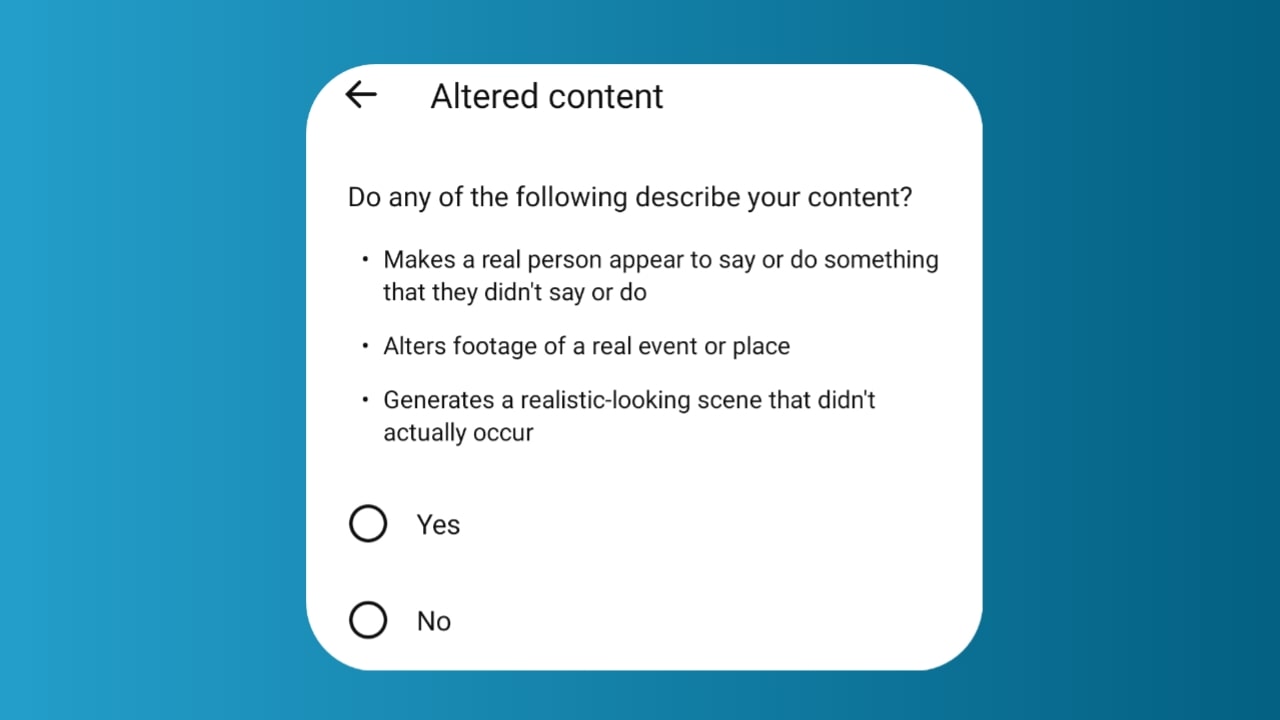

Mandatory Disclosure

According to Google and YouTube, creators who upload photos or videos made with AI must clearly state that it is AI content. Disclosure matters most for content such as:

- Fabricated information or videos presented as real.

- Statements attributed to a real person that they never made.

- Videos edited so that viewers cannot tell whether they are real or fake.

That’s why major platforms like Meta and YouTube are now introducing tools to flag AI content.

Automatic Detection

Meta and YouTube are also developing AI systems that can automatically identify whether a video or photo has been altered by AI. These systems use:

- Invisible Watermarks

- Metadata Tracking

- C2PA and IPTC Standards

This means that even if the creator doesn’t disclose that the content is AI-created, the platform may still be able to identify it, as long as these signals are present in the file.
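To make the metadata idea concrete, here is a minimal Python sketch of how a metadata check might work. It assumes the file carries an IPTC "Digital Source Type" value inside its embedded XMP metadata; the function names and the label strings are hypothetical, and this is not how Meta or YouTube actually implement detection. Real C2PA verification also involves validating a cryptographically signed manifest, which this sketch does not attempt.

```python
import re
from typing import Optional

# IPTC Digital Source Type terms that indicate generative-AI involvement.
AI_SOURCE_TYPES = {
    "trainedAlgorithmicMedia",
    "compositeWithTrainedAlgorithmicMedia",
}

# XMP metadata is stored as text inside the file, so a simple byte search
# can find the IPTC vocabulary URI without a full metadata parser.
_PATTERN = re.compile(rb"cv\.iptc\.org/newscodes/digitalsourcetype/([A-Za-z]+)")


def digital_source_type(data: bytes) -> Optional[str]:
    """Return the IPTC Digital Source Type term found in the bytes, if any."""
    match = _PATTERN.search(data)
    return match.group(1).decode("ascii") if match else None


def suggest_label(data: bytes, creator_disclosed: bool = False) -> Optional[str]:
    """Hypothetical decision rule: creator disclosure first, then metadata."""
    if creator_disclosed:
        return "AI-generated (creator disclosed)"
    if digital_source_type(data) in AI_SOURCE_TYPES:
        return "AI-generated (detected from metadata)"
    return None  # no signal found; this does not prove the content is real


# Usage with a made-up XMP fragment standing in for real file bytes.
sample = (
    b'<rdf:Description Iptc4xmpExt:DigitalSourceType='
    b'"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"/>'
)
print(suggest_label(sample))
print(suggest_label(b"plain image bytes with no metadata"))
```

Note the limitation built into the last case: metadata can be stripped when a file is re-saved or screenshotted, so a missing tag says nothing either way. That is one reason platforms combine metadata with invisible watermarks and creator disclosure.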

Visible Labels

You may now see tags like these in your feed:

- “AI-generated image”

- “Digitally altered content”

- “Imagined with AI”

These labels will appear on posts worldwide, in every language, so people can quickly understand whether the content is real or created with AI.

My Experience With the AI Labeling Feature

When I first saw the words “Imagined with AI” on Instagram, it felt both strange and nice. For months, I’d been seeing hyper-realistic celebrity photos, fake travel photos, and videos that seemed too perfect. Sometimes they were funny, and sometimes I doubted whether they were real. But now, with the label, my confusion has diminished significantly.

What I like most is that everything is clearer now. Social media shapes our perceptions, and when I know a photo or video was created by AI, I feel more confident about what I’m seeing. It also helps me post responsibly: if I share an AI-based photo, my followers can tell from the label, which prevents unnecessary questions.

Another good thing is that I can now identify deepfakes more quickly. Previously, if a video looked even slightly edited, I’d wonder, “Is this really true?” But now, seeing the labels helps me make quick decisions. This makes me feel more secure during elections or major news events.

And best of all, these labels don’t stifle creativity with AI. They simply present things honestly. People who want to create AI-inspired photos or videos for fun can still do so; they just need to clearly state that the content is AI-created.

Why This Update Matters for the Future

As AI advances, AI-created photos and videos will become so realistic that it will be difficult to distinguish between real and fake. Therefore, it’s crucial to develop rules and technologies that work globally.

Meta is now working with major companies like Google, OpenAI, Adobe, and Microsoft to create consistent, reliable rules. This includes invisible watermarks, unique identifiers, and smart AI tools that can detect fake content. All of this will help make the internet safer.

This is also important because some people will always try to misuse these tools. Social media platforms must therefore stay one step ahead of them. Labeling AI content is a strong first step in this direction.

Conclusion

Labeling AI content on YouTube, Facebook, Instagram, and Threads is a major change. It will make content on the internet clearer and more honest than before. This feature strikes the right balance between user safety, creativity, and responsibility.

As a user, I find that things now feel clearer, safer, and more trustworthy. As these tools become more intelligent, the internet will improve too, becoming a place where people can create freely while the truth remains protected.